Technology

Podcast: COVID-19 is helping turn Brazil into a surveillance state

Leading discussions about the global rules to regulate digital privacy and surveillance is a somewhat unusual role for a developing country to play. But Brazil had been doing just that for over a decade.

But in 2014 Edward Snowden’s bombshell about the US National Security Agency’s digital surveillance activities included revelations that the agency had been spying on Brazil’s state-controlled oil company Petrobras, and even on then-president Dilma Rousseff’s communications.

The leaks prompted the Brazilian government to adopt a kind of digital “Bill of Rights” for its citizens, and lawmakers would go on to pass a data protection measure closely modeled on Europe’s GDPR.

But the country has now shifted toward a more authoritarian path.

Last October, President Jair Bolsonaro signed a decree compelling all federal bodies to share the vast troves of data they hold on Brazilian citizens and consolidate it in a centralized database, the Cadastro Base do Cidadão (Citizen’s Basic Register).

The government says it wants to use the data to improve public services and cut down on crime, but critics warn Bolsonaro’s far-right leadership could use the data to spy on political dissidents.

For the September/October issue of MIT Technology Review, journalist Richard Kemeny explains how the government’s move to centralize civilian data could lead to a human rights catastrophe in South America’s biggest economy. This week on Deep Tech, he joins our editor-in-chief, Gideon Lichfield, to discuss why the country’s slide into techno-authoritarianism is being accelerated by the coronavirus pandemic.

Show notes and links:

- Brazil is sliding into techno-authoritarianism, August 19, 2020

- Brazilian Foreign Policy Towards Internet Governance, February 6, 2020

Full episode transcript:

Anchor for CBN News: A new report says the National Security Agency spied on the presidents of Brazil and Mexico. The journalist who broke the NSA domestic spying story has told a Brazilian news program that emails from both leaders were being intercepted. He based his report on information from NSA leaker Edward Snowden.

Gideon Lichfield: The Edward Snowden leaks in 2014 revealed that the US National Security Agency had been spying on people’s communications all around the world. And that one of the countries where it collected the most data was Brazil.

That very same year, partly in response to Snowden’s leaks, the Brazilian government adopted the Marco Civil—a kind of internet “bill of rights” for its citizens. And in 2018, Brazil’s congress would pass a data protection law closely modeled on Europe’s ground-breaking GDPR.

Just two years later, though, things look very different. Brazil has been on a techno-authoritarian streak.

Last October, President Jair Bolsonaro signed a law compelling federal bodies to share most of the data they hold on Brazilian citizens and consolidate it in a vast, centralized database.

This includes data on everything from employment to health records to biometric information like your face and voiceprint.

The government says all this should help improve public services and fight crime, but under a far-right president who has clamped down on civil liberties, it looks more like a way to make it easier to spy on dissidents.

Today, I’m talking to Richard Kemeny, a journalist based in São Paulo. His story in our latest issue (the techno-nationalism issue) explains how the coronavirus pandemic appears to be accelerating Brazil’s slide toward a surveillance state.

I’m Gideon Lichfield, editor-in-chief of MIT Technology Review, and this is Deep Tech.

So Richard, Brazil has this history of being pretty advanced on internet governance and on digital civil rights. Tell us a bit about that. How did that begin?

Richard Kemeny: Sure, I mean, you know, way back in the nineties, when all things internet were just kind of kicking off, Brazil was actually quite a progressive, leading voice in the conversation. When the internet was working its way into society, Brazil set up a body known as the Internet Steering Committee, whose job was to smooth the transition of the internet into society and kind of improve its development.

Gideon Lichfield: Okay. So then fast forward to 2014, Edward Snowden leaked the intelligence files about the NSA spying on people around the world, and that made a big impact in Brazil, right? Why was that?

Richard Kemeny: It did. One of the main sticking points was it was found that the NSA had hacked Petrobras, the state-owned oil company. And this was seen as an affront to Brazil, particularly because they’re an ally of the United States. And so this led to the government, which was then led by Dilma Rousseff, setting up the Marco Civil, which is essentially a bill of rights for the internet. And it was actually taken as a model for other countries, such as Italy, who wanted to set up something similar.

Gideon Lichfield: And then a few years later, Brazil passed a data privacy law that was also quite forward looking.

Richard Kemeny: Correct. It was closely modeled on Europe’s GDPR. The Lei Geral de Proteção de Dados. LGPD. So the LGPD also establishes the right to privacy for citizens’ data and also protects them in a way that they know the way in which their data is being used and that it’s used in a proportionate way.

Richard Kemeny: And so Brazilian society was kind of founded on this culture of openness and transparency regarding these kinds of issues. Something that involved public debate and public contribution. And that’s something that’s changed in recent years.

Gideon Lichfield: And when did this change begin?

Richard Kemeny: So it started after the impeachment of Dilma Rousseff, who left in 2016. And that brought in President Michel Temer. He brought in the LGPD but vetoed some of it. So he watered it down in certain ways, specifically regarding the punishment of bodies that contravene the law. And this tendency was continued then under the government of Jair Bolsonaro, who was voted into office in 2018.

Gideon Lichfield: Now Bolsonaro is very far right. And he’s been taking a lot of things in Brazil in a more authoritarian direction. What’s he done as regards data rights and digital rights?

Richard Kemeny: Certainly the biggest move came in October 2019, when more or less out of the blue President Bolsonaro signed a decree essentially compelling all public bodies to start sharing citizen information between each other more or less freely. This took many observers by surprise. It was something that wasn’t debated publicly. Many people didn’t really see it coming.

Gideon Lichfield: So why does the government say that it needs all of this centralized data?

Richard Kemeny: So the rationale behind the decree, according to the public line, was to improve the quality and consistency of the data that the government holds on citizens. One of the effects of the pandemic was to shine a light on millions of citizens who were in fact previously invisible to the government. Not registered on any public system. By the end of April, around 46 million had registered to apply for emergency financial aid.

Gideon Lichfield: What sorts of agencies are sharing data and what kinds of data are they sharing?

Richard Kemeny: So this includes all public bodies that hold information on citizens. And the data is very broad. It ranges from facial information, biometric characteristics and voice data, even down to the way that people walk, and all of this information is being poured into a vast database, which was also set up under the decree. The Cadastro Base do Cidadão, or Citizen’s Basic Register.

Gideon Lichfield: Okay. So all of these federal bodies can now share data. Have there been any examples that we know of, of agencies swapping data in this way?

Richard Kemeny: One of the things that came out in June was articles leaked to The Intercept, which showed that ABIN, the security agency, requested the data of 76 million Brazilian citizens. All of those who hold driving licenses. So this was seen as perhaps the first known use of this decree to enact a large data grab.

Gideon Lichfield: In other words, the Brazilian national security agency basically just hoovered up the data of 76 million people without having to justify why it wanted it?

Richard Kemeny: Exactly. It seemed like there was no need for the justification because of the decree. And this kind of a vast amount of information was widely seen as disproportionate.

Gideon Lichfield: Brazil has also been using surveillance technology a lot more, right? Can you talk a bit about that?

Richard Kemeny: Yeah, that’s correct. Like many countries around the world, Brazil has steadily been increasing both the amount of, and use of, surveillance equipment. There was a marked increase when Brazil hosted the football World Cup in 2014, followed by the Olympics in 2016. This is when surveillance technology was really brought in. And obviously since then the technology has stuck around.

Gideon Lichfield: And when we say surveillance technology, what kind of technology are we talking about?

Richard Kemeny: Mostly facial recognition technology. Facial recognition cameras that were set up throughout cities to monitor crime. And indeed police forces have been increasingly using this technology to spot signs of crime. Obviously this is useful in countries such as Brazil, where murder rates are about five times the global average and crime has been seen as a major problem in society.

Gideon Lichfield: In our other podcast, In Machines We Trust, we’ve been looking at some of the problems of using face recognition for policing. What are the issues for it in Brazil?

Richard Kemeny: So one of the problems is that facial recognition technology is largely being developed by researchers in the West and largely created through the use of information from white faces. So in a country like Brazil, where the majority of the population is black or brown, this can pose serious problems when it comes to misidentification of criminals.

Richard Kemeny: Especially in certain areas where crime is high and levels of poverty are also high. You can imagine a situation where a poor black man is misidentified through facial recognition technology. He can’t afford a lawyer for himself. That’s certainly a situation that no one wants to be put in.

Gideon Lichfield: And this centralized data register, the Cadastro, obviously it’s raising concerns because it allows government agencies to get as much data as they want on whoever they want. What sorts of other worries are there about it?

Richard Kemeny: I mean, one of the main concerns with having this much consolidated and centralized information is that it basically becomes a huge honeypot for criminals.

So Brazil has an unfortunate history of sensitive data finding its way onto the internet. In 2016, São Paulo accidentally exposed the medical information of 365,000 patients from the public health system.

Then in 2018, the tax ID numbers of 120 million people were left out on the internet. And this was basically due to someone accidentally renaming the file the wrong way. So this kind of thing, if you imagine, having the centralized database with the most amount of data possible about citizens, all in one place, either being hacked by criminals or just following some kind of accidental leak, presents a massive security risk. And one that many feel is not warranted for the potential benefits it could bring.

Gideon Lichfield: So we have this big centralized citizens’ data register and we have the increasing use of surveillance and face recognition. Put these two together, what risks does that create?

Richard Kemeny: So taken together, this vast database coupled with the increase in surveillance technology that’s being used more freely and more widely throughout the country is something that I actually spoke about with Rafael Zanatta, who is the director of NGO Data Privacy Brazil. He says he worries about the data of citizens who benefit from public services like welfare being used to build political profiles that can be targeted by the government.

Rafael Zanatta: So it is quite possible to do very powerful and precise inferences about political association based on the richness of the data and the beneficiaries of public policies. So we believe that the kind of risk that we do have now with the Cadastro Base do Cidadão it is close to the one we had in the seventies during the military dictatorship in the sense that it is possible for some parts of the intelligence community of the army to understand political patterns, patterns of association, and to do inferences based on this unified databases.

And this is very disturbing about Brazil because in most of the democratic nations, the intelligence community is worried about threats coming from the outside. So, the intelligence community worries about who are the terrorist groups or the foreigners, or external threats that might challenge the institutions in the democratic framework of a country. But in Brazil, what we’ve been seeing in the past years is that the intelligence community is looking like domestically. They believe that the threats are inside. They believe that they should actively monitor Instagram accounts, Twitter accounts and social networks to understand who are the people making protests and opposition within the country.

Richard Kemeny: Rafael says the effects of Brazil’s continued slide into techno-authoritarianism could be catastrophic for human rights in the near future.

Rafael Zanatta: Imagine that you could go out for a walk in your hometown in a small city in Brazil, and you go through a public square cause you want to buy some popcorn or some ice cream. And then a facial recognition camera in that square captures your face, sends it to a local database of local security. And that local database of your municipality, your city hall, is linked to the ministry of justice to the federal government.

And they cross that database and they identify that actually you’ve been persecuted for a political crime. An attempt to disrupt the federal government or something like that, because you were actively involved in some Instagram and Twitter conversations that are considered dangerous. And there was in fact a criminal investigation upon you that you were not aware of.

And then this federal database that is linked to the municipal one goes at the same time to the Cadastro Base do Cidadão which has a link to the database operated by ABIN and a secondary intelligence community of the army that immediately recognizes that you are a threat and immediately sets up an alarm system that directs police officers to go there and take the picture of you once again and arrest you and take you to custody. This is not the life I want my children to have.

That’s why it is so important to have public commitments of the government with the data protection law. Because it is a legislation that really reinforces the idea of purpose limitation and that one specific unity of the government has a specific mandate to process the data only for one specific reason.

Gideon Lichfield: So there is this vast centralized database now, but Brazil also still has this data protection law that it passed in 2018. Those two things seem contradictory. How do they work together?

Richard Kemeny: So in theory, the data protection law should ensure the correct and proportionate use of citizen data. This means that data will be taken by a body, used in a specific way, for a specific purpose, and then deleted or destroyed or given back afterwards. And it should also ensure that citizens know exactly how their data is being used. And in theory that they should be able to agree to its use for this reason. Of course the extent to which this law can actually regulate the use of citizen information depends on the way that the law is implemented and the way that it’s monitored.

So some of the people I spoke to while reporting this piece explained that there are some inconsistencies between the decree that brought in this database and the data sharing and the new data law, the LGPD. For example biometric data is something that’s seen as highly sensitive under the LGPD, but in the decree could still be shared between bodies.

And in practice, it’s not exactly clear which law is going to trump which and how this information will be monitored. So the government had tried to delay the implementation of LGPD until May next year, citing reasons such as businesses not being able to prepare for the law during the pandemic. Since the article has been published, the Senate has actually voted against the government and the law will now come into effect this year.

So there’s one body that monitors how the database is used. There’s another one that monitors how the data protection law is implemented. And then there’s a separate advisory body on top of that. Essentially data police to monitor the data police. So in theory, these multiple layers should provide a high level of independence and a high level of adherence to these laws. However, the strength of these independent bodies completely depends on who is put into these positions, and ultimately the decisions for those lie within the office of the president.

Gideon Lichfield: How has the pandemic accelerated this instinct of the government to amass more data and step up surveillance?

Richard Kemeny: So we have seen this trend for data grabbing increase during the pandemic. In April, the president signed a decree imploring telecoms companies to hand over data on 226 million Brazilian citizens to the state statistical organization under the pretext of monitoring income and employment during the pandemic.

This was seen as a hugely disproportionate grab for data, largely because, in the past, the amount of information needed to carry out this task was far smaller. And added to that is the fact that the federal government and the president have denied the severity of the virus. This means that this seems far more just about grabbing as much information as possible. And in the end it was seen as unconstitutional and disproportionate and the Supreme Court struck it down.

Gideon Lichfield: So it seems like Brazil is kind of at an inflection point. It’s got this increasingly authoritarian tendency, which is consolidating data and increasing surveillance, but it still has a strong civil society and a court system that’s pushing back. How do you think this might play out?

Richard Kemeny: Absolutely. So on the spectrum of the way that countries manage citizen data, privacy and surveillance, you have China on the one end, a surveillance state, which in effect controls the behavior of the citizens. And on the other end, somewhere progressive like Estonia, where citizen information is decentralized and no one institution holds all of the data in its institutional basket. I see Brazil carving its own path down the middle, because you do have these pressures from this federal government of data grabbing, of consolidation of data and of increasing surveillance. But on the other hand, you do have these strong counteracting balances. Congress and the Senate. The court system. And a wealth of NGOs that are pushing back on these tendencies of the government almost at every turn.

Gideon Lichfield: That’s it for this episode of Deep Tech. This is a podcast just for subscribers of MIT Technology Review, to bring alive the issues our journalists are thinking and writing about. You’ll find Richard Kemeny’s article “One register to rule them all” in the September issue of the magazine.

Deep Tech is written and produced by Anthony Green and edited by Jennifer Strong and Michael Reilly. I’m Gideon Lichfield. Thanks for listening.

California looks to eliminate gas guzzlers—but legal hurdles abound

California governor Gavin Newsom made a bold attempt today to eliminate sales of new gas-guzzling cars and trucks, marking a critical step in the state’s quest to become carbon neutral by 2045. But the effort to clean up the state’s largest source of climate emissions is almost certain to face serious legal challenges, particularly if President Donald Trump is reelected in November.

Newsom issued an executive order that directs state agencies, including the California Air Resources Board, to develop regulations with the “goal” of ensuring that all new passenger cars and trucks sold in the state are zero-emissions vehicles by 2035. That pretty much limits future sales to electric vehicles (EVs) powered by batteries or hydrogen fuel cells. Most new medium- and heavy-duty vehicles would need to be emissions free by 2045.

These shifts could be accomplished through restrictions on internal-combustion-engine vehicles, or through subsidies or other policy instruments that tighten or become more generous over time. If those rules are enacted, they would form one of the most aggressive state climate policies on the books, with major implications for the auto industry.

The roughly 2 million new vehicles sold in the state each year would eventually all be EVs, providing a huge boost to the still nascent vehicle category.

“California policy, especially automotive policy, has cascading effects across the US and even internationally, just because of the scale of our market,” says Alissa Kendall, a professor of civil and environmental engineering at the University of California, Davis.

Indeed, the order would mean more auto companies would produce more EV lines, scaling up manufacturing and driving down costs. The growing market would, in turn, create greater incentives to build out the charging or hydrogen-fueling infrastructure necessary to support a broader transition to cleaner vehicles.

The move also could make a big dent in transportation emissions. Passenger and heavy-duty vehicles together account for more than 35% of the state’s climate pollution, which has proved an especially tricky share to reduce in a sprawling state of car-loving residents (indeed, California’s vehicle emissions have been ticking up).

But Newsom’s executive order only goes so far. It doesn’t address planes, trains, or ships, and it could take another couple of decades for residents to stop driving all the gas-powered vehicles already on the road.

Whether the rules go into effect at all, and to what degree, will depend on many variables, including what legal grounds the Air Resources Board uses to justify the policies, says Danny Cullenward, a lecturer at Stanford’s law school who focuses on environmental policy.

One likely route is for the board to base the new regulations on tailpipe emissions standards, which California has used in the past to force automakers to produce more fuel-efficient vehicles, nudging national standards forward. But that approach may require a new waiver from the Environmental Protection Agency allowing the state to exceed the federal government’s vehicle emissions rules under the Clean Air Act, the source of an already heated battle between the state and the Trump administration.

Last year, Trump announced he would revoke the earlier waiver that allowed California to set tighter standards, prompting the state and New York to sue. So whether California can pursue this route could depend on how courts view the issue and who is in the White House come late January.

It’s very likely that the automotive industry will challenge the rules no matter how the state goes about drafting them. And the outcome of those cases could depend on which courts they land in, and perhaps, eventually, on who is sitting on the Supreme Court.

But whatever legal hurdles they may face, California and other states need to rapidly cut auto emissions to have any hope of combating the rising threat of climate change, says Dave Weiskopf, a senior policy advisor with NextGen Policy in Sacramento.

“This is what science requires, and it’s the next logical step for state policy,” he says.

Update: This story was updated to clarify that the order wouldn’t necessarily achieve its goals through a ban on internal-combustion-engine vehicles.

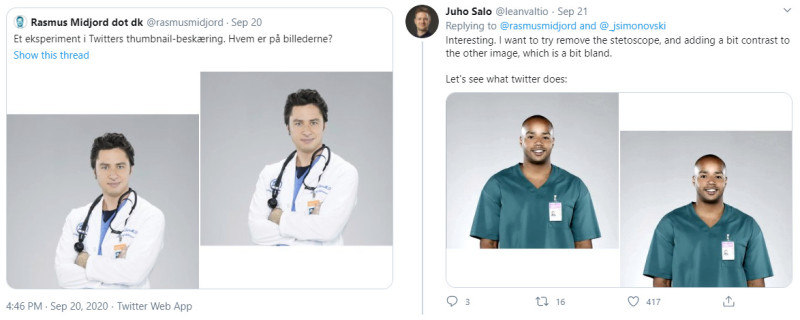

Community Testing Suggests Bias in Twitter’s Cropping Algorithm

Social media and online services are now such huge parts of daily life that our entire world is being shaped by algorithms. Arcane in their workings, they are responsible for the content we see and the adverts we’re shown. Just as importantly, they decide what is hidden from view as well.

Important: Much of this post discusses the performance of a live website algorithm. Some of the links in this post may not perform as reported if viewed at a later date.

Recently, [Colin Madland] posted some screenshots of a Zoom meeting to Twitter, pointing out how Zoom’s background detection algorithm had improperly erased the head of a colleague with darker skin. In doing so, [Colin] noticed a strange effect — although the screenshot he submitted shows both of their faces, Twitter would always crop the image to show just his light-skinned face, no matter the image orientation. The Twitter community raced to explore the problem, and the fallout was swift.

Intentions != Results

Twitter users began to iterate on the problem, testing over and over again with different images. Stock photo models were used, as well as newsreaders, and images of Lenny and Carl from The Simpsons. In the majority of cases, Twitter’s algorithm cropped images to focus on the lighter-skinned face in a photo. In perhaps the most ridiculous example, the algorithm cropped to a black comedian pretending to be white over a normal image of the same comedian.

Many experiments were undertaken, controlling for factors such as differing backgrounds, clothing, or image sharpness. Regardless, the effect persisted, leading Twitter to speak officially on the issue. A spokesperson for the company stated “Our team did test for bias before shipping the model and did not find evidence of racial or gender bias in our testing. But it’s clear from these examples that we’ve got more analysis to do. We’ll continue to share what we learn, what actions we take, and will open source our analysis so others can review and replicate.”

There’s little evidence to suggest that such a bias was purposely coded into the cropping algorithm; certainly, Twitter doesn’t publicly mention any such intent in their blog post on the technology back in 2018. Regardless of this fact, the problem does exist, with negative consequences for those impacted. While a simple image crop may not sound like much, it has the effect of reducing the visibility of affected people and excluding them from online spaces. The problem has been highlighted before, too. In this set of images of a group of AI commentators from January of 2019, the Twitter image crop focused on men’s faces, and women’s chests. The dual standard is particularly damaging in professional contexts, where women and people of color may find themselves seemingly objectified, or cut out entirely, thanks to the machinations of a mysterious algorithm.

Former employees, like [Ferenc Huszár], have also spoken on the issue, particularly about the testing process the product went through prior to launch. His comments suggest that testing was done to explore bias with regard to race and gender. Similarly, [Zehan Wang], currently an engineering lead for Twitter, has stated that these issues were investigated as far back as 2017 without any major bias found.

It’s a difficult problem to parse, as the algorithm is, for all intents and purposes, a black box. Twitter users are obviously unable to observe the source code that governs the algorithm’s behaviour, and thus testing on the live site is the only viable way for anyone outside of the company to research the issue. Much of this has been done ad-hoc, with selection bias likely playing a role. Those looking for a problem will be sure to find one, and are more likely to ignore evidence that counters their assumption.

Efforts are being made to investigate the issue more scientifically, using many studio-shot sample images to attempt to find a bias. However, even these efforts have come under criticism, namely that a source image set designed for machine learning and shot in perfect studio lighting against a white background is not representative of the real images that users post to Twitter.
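One way to limit the selection bias described above is to fix the number of image pairs in advance, post every pair, and then ask how surprising the final tally would be if the crop had no preference at all. The snippet below is only a sketch of that idea: the function name, the example counts, and the choice of an exact two-sided binomial test are illustrative assumptions, not anything Twitter or the community testers are reported to have used.

```python
from math import comb


def two_sided_binomial_p(k: int, n: int, p: float = 0.5) -> float:
    """Exact two-sided binomial p-value.

    k: number of trials in which the crop chose the lighter-skinned face
    n: total number of image pairs posted (decided before testing starts)
    p: chance of choosing that face if the crop were unbiased (assumed 0.5)

    The p-value sums the probability of every outcome that is no more
    likely than the observed one under the "no preference" hypothesis.
    """
    if not 0 <= k <= n:
        raise ValueError("k must lie between 0 and n")
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = pmf[k]
    # Small relative tolerance so ties are not lost to float rounding.
    total = sum(x for x in pmf if x <= observed * (1 + 1e-9))
    return min(1.0, total)


if __name__ == "__main__":
    # HYPOTHETICAL counts for illustration only, not real test results.
    pairs_posted = 40
    lighter_face_chosen = 34
    p_value = two_sided_binomial_p(lighter_face_chosen, pairs_posted)
    print(f"p-value under 'no preference': {p_value:.6f}")
```

A small p-value would only say that the tally is hard to explain by chance. It would not say why the crop behaves that way, and it would still depend on how representative the chosen image pairs are, which is exactly the criticism raised against studio-shot test sets.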

Twitter’s algorithm isn’t the first technology to be accused of racial bias; from soap dispensers to healthcare, these problems have been seen before. Fundamentally though, if Twitter is to solve the problem to anyone’s satisfaction, more work is needed. A much wider battery of tests, featuring a broad sampling of real-world images, needs to be undertaken, and the methodology and results shared with the public. Anything less than this, and it’s unlikely that Twitter will be able to convince the wider userbase that its software isn’t failing minorities. Given that there are gains to be made in understanding machine learning systems, we expect research will continue at a rapid pace to solve the issue.

Anti-Piracy Group Seeks Owners Of YTS, Pirate Bay And Others

The Alliance for Creativity and Entertainment (ACE) is attempting to uncover the owners of pirate sites like YTS, Pirate Bay, Tamilrockers, and several others.

For that purpose, ACE previously filed a DMCA subpoena that requires Cloudflare to unveil the operators of several pirate sites. According to the latest report from TorrentFreak, a total of 46 high-profile pirate websites have been named by the anti-piracy coalition.

ACE is an anti-piracy organization that cracks down on the operators of pirate websites. Some of its members include Amazon, Netflix, Disney, and Paramount Pictures. The latest targets of this crackdown are pirate sites using Cloudflare. For the uninitiated, Cloudflare is a content delivery network (CDN) service provider used by millions of websites globally.

In the first half of 2020, Cloudflare received 31 such requests, concerning 83 accounts, most of which were related to adult sites.

The current subpoena targets 46 pirate websites, including some big fish like Pirate Bay, YTS, 1337x, 123Movies, and more. Once in action, it’ll force Cloudflare to reveal the IP addresses, emails, names, physical addresses, phone numbers, and payment methods of the people operating these websites.

Here is the list of domains that ACE wants to gather information on —

- yts.mx

- pelisplus.me

- 1337x.to

- seasonvar.ru

- cuevana3.io

- kinogo.by

- thepiratebay.org

- lordfilm.cx

- swatchseries.to

- eztv.io

- 123movies.la

- megadede.com

- sorozatbarat.online

- cinecalidad.is

- limetorrents.info

- cinecalidad.to

- kimcartoon.to

- tamilrockers.ws

- cima4u.io

- fullhdfilmizlesene.co

- yggtorrent.si

- time2watch.io

- online-filmek.me

- lordfilms-s.pw

- extremedown.video

- streamkiste.tv

- dontorrent.org

- kinozal.tv

- fanserial.net

- 5movies.to

- altadefinizione.group

- cpasmieux.org

- primewire.li

- primewire.ag

- primewire.vc

- series9.to

- europixhd.io

- oxtorrent.pw

- pirateproxy.voto

- rarbgmirror.org

- rlsbb.ru

- gnula.se

- rarbgproxied.org

- seriespapaya.nu

- tirexo.com

- cb01.events

- kinox.to

- filmstoon.pro

- descargasdd.net

Earlier in August, it was reported that torrent giant YTS had shared user data with a law firm. This led to users receiving letters from the law firm, asking them to pay around $1,000 for using the pirate site or be named in a lawsuit for piracy.

Recently, ACE has set out on a crusade to banish pirate websites. While there has been no significant action from the group in the past few years, 2020 is seeing increasing activity in reporting and controlling piracy.

The post Anti-Piracy Group Seeks Owners Of YTS, Pirate Bay And Others appeared first on Fossbytes.
