Technology

Software that monitors students during tests perpetuates inequality and violates their privacy

The coronavirus pandemic has been a boon for the test proctoring industry. About half a dozen companies in the US claim their software can accurately detect and prevent cheating in online tests. Examity, HonorLock, Proctorio, ProctorU, Respondus and others have rapidly grown since colleges and universities switched to remote classes.

While there’s no official tally, it’s reasonable to say that millions of algorithmically proctored tests are happening every month around the world. Proctorio told the New York Times in May that business had increased by 900% during the first few months of the pandemic, to the point where the company proctored 2.5 million tests worldwide in April alone.

I’m a university librarian and I’ve seen the impacts of these systems up close. My own employer, the University of Colorado Denver, has a contract with Proctorio.

It’s become clear to me that algorithmic proctoring is a modern surveillance technology that reinforces white supremacy, sexism, ableism, and transphobia. The use of these tools is an invasion of students’ privacy and, often, a civil rights violation.

If you’re a student taking an algorithmically proctored test, here’s how it works: When you begin, the software starts recording your computer’s camera, audio, and the websites you visit. It measures your body and watches you for the duration of the exam, tracking your movements to identify what it considers cheating behaviors. If you do anything that the software deems suspicious, it will alert your professor to view the recording and provide them with a color-coded probability of your academic misconduct.

Depending on which company made the software, it will use some combination of machine learning, AI, and biometrics (including facial recognition, facial detection, or eye tracking) to do all of this. The problem is that facial recognition and detection have proven to be racist, sexist, and transphobic over, and over, and over again.

In general, technology has a pattern of reinforcing structural oppression like racism and sexism. Now these same biases are showing up in test proctoring software that disproportionately hurts marginalized students.

A Black woman at my university once told me that whenever she used Proctorio’s test proctoring software, it always prompted her to shine more light on her face. The software couldn’t validate her identity and she was denied access to tests so often that she had to go to her professor to make other arrangements. Her white peers never had this problem.

Similar kinds of discrimination can happen if a student is trans or non-binary. But if you’re a white cis man (like most of the developers who make facial recognition software), you’ll probably be fine.

Students with children are also penalized by these systems. If you’ve ever tried to answer emails while caring for kids, you know how impossible it can be to get even a few uninterrupted minutes in front of the computer. But several proctoring programs will flag noises in the room or anyone who leaves the camera’s view as nefarious. That means students with medical conditions who must use the bathroom or administer medication frequently would be considered similarly suspect.

Beyond all the ways that proctoring software can discriminate against students, algorithmic proctoring is also a significant invasion of privacy. These products film students in their homes and often require them to complete “room scans,” which involve using their camera to show their surroundings. In many cases, professors can access the recordings of their students at any time, and even download these recordings to their personal machines. They can also see each student’s location based on their IP address.

Privacy is paramount to librarians like me because patrons trust us with their data. After 9/11, when the Patriot Act authorized the US Department of Homeland Security to access library patron records in their search for terrorists, many librarians started using software that deleted a patron’s record once a book was returned. Products that violate people’s privacy and discriminate against them go against my professional ethos, and it’s deeply concerning to see such products eagerly adopted by institutions of higher education.

This zealousness would be slightly more understandable if there were any evidence that these programs actually did what they claim. To my knowledge, there isn’t a single peer-reviewed or controlled study that shows proctoring software effectively detects or prevents cheating. Given that universities pride themselves on making evidence-based decisions, this is a glaring oversight.

Fortunately, there are movements underway to ban proctoring software and ban face recognition technologies on campuses, as well as congressional bills to ban the US federal government from using face recognition. But even if face recognition technology were banned, proctoring software could still exist as a program that tracks the movements of students’ eyes and bodies. While that might be less racist, it would still discriminate against people with disabilities, breastfeeding parents, and people who are neuroatypical. These products can’t be reformed; they should be abandoned.

Cheating is not the threat to society that test proctoring companies would have you believe. It doesn’t dilute the value of degrees or degrade institutional reputations, and students aren’t trying to cheat their way into being your surgeon. Technology didn’t invent the conditions for cheating and it won’t be what stops it. The best thing we in higher education can do is to start with the radical idea of trusting students. Let’s choose compassion over surveillance.

Shea Swauger is an academic librarian and researcher at the University of Colorado Denver.


How Do Delivery Robots Work? How They Safely Deliver Your Packages

A distant future involving robotic package deliveries is now very much a reality. Advances in robotics, GPS tracking, automation, and navigation now mean you might not find a delivery person at your door with your package.

You might find a delivery robot instead.

With semi-autonomous robots beginning to enter the world, here’s a look at how delivery robots work.

What Is a Delivery Robot?

A delivery robot is an automated robot that brings your delivery directly to your door. These robots aren’t walking and talking humanoids; rather, these robots are cute delivery containers on six wheels, resembling giant (but friendly-looking!) bugs.

As with other delivery services, you make your purchases through an app with vendors based on your location. The robot trundles to the vendor—whether for shopping, food, drinks, or otherwise—and then it makes its way to your home.

How Does a Delivery Robot Work?

The primary example of delivery robots in action comes from Starship Technologies, a company based out of San Francisco with engineering facilities in Estonia and Finland. Starship Technologies is the brainchild of Skype co-founders Janus Friis and Ahti Heinla, and the company is currently the largest “last mile” delivery robot company around.

So, how does an autonomous delivery robot make a delivery?

The robots have a cargo capacity of around 9kg, can travel at a maximum speed of 4 mph, weigh around 25kg, and cost over $5,000 to manufacture. The delivery robot uses many of the same features as an autonomous car: 10 cameras for 360-degree vision, several ultrasonic sensors, GPS navigation, inertial measurement units, gyroscopes, and much more.

How Do Delivery Robots Navigate?

The route between a vendor and a delivery point might look A-to-B if you plug the locations into a navigation app… but there are extra considerations for a delivery robot, including sidewalks, crossings, driveways, humans, animals, vehicles, and so on.

Starship’s robots calculate a route based upon the shortest distance and satellite imagery detailing the route. Each feature on the route (crossings, driveways, etc.) receives a time calculation, which the robot factors into route selection and delivery time.
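The route selection described above can be sketched as a shortest-path search in which every sidewalk segment carries a travel time and every feature along it adds a time penalty. This is only an illustration of the idea, not Starship's actual planner: the toy map, the penalty values, and the function name `fastest_route` are all assumptions made for the example.

```python
import heapq

# Hypothetical per-feature time penalties in seconds (illustrative values only).
FEATURE_PENALTY_S = {"crossing": 45.0, "driveway": 10.0}


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm over edges of the form (neighbor, travel_s, features)."""
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        elapsed, node, path = heapq.heappop(queue)
        if node == goal:
            return elapsed, path
        if elapsed > best.get(node, float("inf")):
            continue  # stale queue entry, a faster way to this node was already found
        for neighbor, travel_s, features in graph.get(node, []):
            # Segment cost = travel time plus a penalty for each feature on it.
            cost = elapsed + travel_s + sum(FEATURE_PENALTY_S[f] for f in features)
            if cost < best.get(neighbor, float("inf")):
                best[neighbor] = cost
                heapq.heappush(queue, (cost, neighbor, path + [neighbor]))
    return None  # goal not reachable


# Toy map: the route via "corner" is shorter in travel time but has two crossings.
graph = {
    "vendor": [("corner", 60.0, ["crossing"]), ("park", 90.0, [])],
    "corner": [("home", 60.0, ["crossing"])],
    "park": [("home", 100.0, ["driveway"])],
}
eta_s, route = fastest_route(graph, "vendor", "home")
print(route, eta_s)
```

In this toy map the longer path through the park wins, because the two crossings on the direct path cost more time than the extra distance does.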

Over time, the robots build a collaborative memory of an area, creating a wireframe map of constant features (buildings, crossings, statues, pathways, etc.) and ensuring that future journeys through the area are faster. The collaborative area-building makes navigation easier for every robot in the vicinity, with all units contributing to building out the local map.

But navigation isn’t always smooth sailing. Aside from regular navigational dilemmas, a malfunctioning robot comes with its own problems. For example, a Starship robot in Milton Keynes malfunctioned—and drove straight into a canal.

Does Anyone Control the Delivery Robot?

While the Starship Technologies robots are autonomous, they are not disconnected from their operators. If a robot comes up against a significant challenge, such as a particularly massive curb (they can climb up and over regular sidewalk curbs), a human operator can take control and find a solution.

But for the most part, the robots are designed to take everything into account, focusing strongly on the sidewalk. Delivery robots that share routes with pedestrians have every potential to cause irritation.

These potential issues are all considered, but the robots must still learn the correct way to interact with humans. How many times have you faced the awkward situation of walking at a similar pace to someone just ahead of you? Do you speed up to pass, then continue walking faster? Do you slow down to give them time to move further ahead? Is your destination close enough so that you don’t need to overtake?

The delivery robots are learning how to solve these problems, as well as countless others.


How Do You Order Take-Out From a Robot?

Starship’s robotic delivery team are currently operating in several US cities but in limited geographic areas. For example, you can order via Starship at Arizona State University, in Fairfax City, Virginia, or Modesto, California—but only in a limited area. The images below show the delivery areas for those respective locations:

If the vendor you want to order from and your delivery address are both within the robot’s delivery area, you can order from the Starship Delivery app. The app displays a list of vendors you can order from. You place your order, and a local delivery robot makes its way to the vendor to pick it up. The robot then trundles to your front door. You use the app to track the delivery robot and to unlock its secure cargo compartment.

The Starship Technologies delivery service costs $1.99 per delivery.

For vendors, the reality is slightly different. The delivery robots are cute and get the job done, but Starship’s terms of partnership can take up to a 20% cut per delivery, after a free month’s trial of the service.

Delivery Robots and COVID-19

The 2020 COVID-19 pandemic provided a new and interesting dynamic for Starship Technologies and its delivery robots. With huge numbers of people entering lockdown at differing times and with many people attempting to self-isolate and socially distance from the general public, the delivery robots present a perfect non-human delivery system.

In Milton Keynes, UK, the demand for robot deliveries rose significantly during the early stages of the UK COVID-19 lockdown. US cities and university campuses saw similar demand for robotic, almost zero-human-interaction deliveries. For those on at-risk lists due to pre-existing conditions or healthcare workers struggling to purchase groceries after a long shift, robotic deliveries are a vital lifeline.

Does Amazon Have Delivery Robots?

Starship Technologies was the first company to use delivery robots as its core delivery method. Recognizing that last-mile delivery is a phenomenally large market was a masterstroke. But the world’s largest online marketplace, Amazon, isn’t far behind.

Amazon Scout is another six-wheeled robot that moves across sidewalks and crossings at walking pace, but this one brings your Amazon delivery directly to your door. Scout is currently available to Amazon customers in the area near Amazon’s headquarters in Seattle, as well as Irvine, California, with recent trial expansions to Atlanta, Georgia and Franklin, Tennessee.

Delivery Robots Are Coming to Your Home

A friendly delivery robot bringing curry to your door is charming and is a reality for millions of people. The rollout of delivery robots won’t happen overnight, and there are significant challenges for the delivery robotics sector, as well as for rural communities.


Image Credit: JHVEPhoto/Shutterstock



Facebook wants to make AI better by asking people to break it

The explosive successes of AI in the last decade or so are typically chalked up to lots of data and lots of computing power. But benchmarks also play a crucial role in driving progress—tests that researchers can pit their AI against to see how advanced it is. For example, ImageNet, a public data set of 14 million images, sets a target for image recognition. MNIST did the same for handwriting recognition and GLUE (General Language Understanding Evaluation) for natural-language processing, leading to breakthrough language models like GPT-3.

A fixed target soon gets overtaken. ImageNet is being updated and GLUE has been replaced by SuperGLUE, a set of harder linguistic tasks. Still, sooner or later researchers will report that their AI has reached superhuman levels, outperforming people in this or that challenge. And that’s a problem if we want benchmarks to keep driving progress.

So Facebook is releasing a new kind of test that pits AIs against humans who do their best to trip them up. Called Dynabench, the test will be as hard as people choose to make it.

Benchmarks can be very misleading, says Douwe Kiela at Facebook AI Research, who led the team behind the tool. Focusing too much on benchmarks can mean losing sight of wider goals. The test can become the task.

“You end up with a system that is better at the test than humans are but not better at the overall task,” he says. “It’s very deceiving, because it makes it look like we’re much further than we actually are.”

Kiela thinks that’s a particular problem with NLP right now. A language model like GPT-3 appears intelligent because it is so good at mimicking language. But it is hard to say how much these systems actually understand.

Think about trying to measure human intelligence, he says. You can give people IQ tests, but that doesn’t tell you if they really grasp a subject. To do that you need to talk to them, ask questions.

Dynabench does something similar, using people to interrogate AIs. Released online today, it invites people to go to the website and quiz the models behind it. For example, you could give a language model a Wikipedia page and then ask it questions, scoring its answers.

In some ways, the idea is similar to the way people are playing with GPT-3 already, testing its limits, or the way chatbots are evaluated for the Loebner Prize, a contest where bots try to pass as human. But with Dynabench, failures that surface during testing will automatically be fed back into future models, making them better all the time.
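The feedback loop described here, where people try to fool a model and the failures become new training data, can be sketched in a few lines. This is a toy illustration under stated assumptions, not Dynabench's code or API: `toy_model` is a deliberately naive stand-in classifier, and `collect_failures` and the sample submissions are hypothetical.

```python
def toy_model(text):
    """Stand-in sentiment classifier: naive keyword matching (purely illustrative)."""
    return "negative" if "bad" in text.lower() else "positive"


def collect_failures(model, submissions):
    """Keep the human-written examples that the model gets wrong."""
    fooled = []
    for text, human_label in submissions:
        prediction = model(text)
        if prediction != human_label:
            fooled.append(
                {"text": text, "label": human_label, "model_said": prediction}
            )
    return fooled


# Hypothetical submissions: each pairs a sentence with the label its author intended.
submissions = [
    ("This movie was bad.", "negative"),
    ("Not bad at all, I loved it.", "positive"),
    ("I want those two hours back.", "negative"),
]

# Examples that fooled the model would be added to the next round's training set.
next_round_training_data = collect_failures(toy_model, submissions)
for example in next_round_training_data:
    print(example)
```

The keyword model handles the first sentence but is fooled by the negation and by the sentence with no obvious sentiment word, so those two examples are the ones a later model would be trained on.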

For now Dynabench will focus on language models because they are one of the easiest kinds of AI for humans to interact with. “Everybody speaks a language,” says Kiela. “You don’t need any real knowledge of how to break these models.”

But the approach should work for other types of neural network too, such as speech or image recognition systems. You’d just need a way for people to upload their own images—or have them draw things—to test it, says Kiela: “The long-term vision for this is to open it up so that anyone can spin up their own model and start collecting their own data.”

“We want to convince the AI community that there’s a better way to measure progress,” he adds. “Hopefully, it will result in faster progress and a better understanding of why machine-learning models still fail.”


In Praise Of The DT830, The Phenomenal Instrument You Probably Don’t Recognise For What It Is

If we had to make a guess at the single piece of electronic bench equipment owned by the highest proportion of Hackaday readers, it would not be a budget oscilloscope from Rigol, nor would it be a popular portable soldering iron like the TS100. Instead we’re guessing that it’s a multimeter, and not even the most accomplished one.

The DT830 is a genericised Chinese-manufactured 3.5 digit digital multimeter that can be had for an astonishingly low price. Less than a decent hamburger gets you an instantly recognisable plastic case with a chunky rotary range selector switch, and maybe a socket for some kind of transistor or component tester. Make sure that there is a 9 volt battery installed, plug in the pair of test leads, and you’re in business for almost any day-to-day electrical or electronic measurement. They’ve been available in one form or another for decades and have been the subject of innumerable give-aways and loss-leader offers, so it’s a reasonable guess that you’ll have one somewhere. I have three as far as I know; they make great on-the-go instruments and have proved themselves surprisingly reliable for what they are.

Persuading You Is Going To Be A Tough Sell

If you talk about the DT830 in polite company, you might be greeted with snorts of derision. It’s not difficult to find reviews that tear one down and compare it to a more expensive meter, and not surprisingly find the pricey meter to be of higher quality.

And it’s certainly true that for a couple of dollars, you get a switch that won’t last forever and high voltage isolation that maybe isn’t quite up to spec. But I’m going to advance a different take on the DT830 that may surprise some of you: to me it’s a modern classic, an instrument that provides performance for its price that is nothing short of phenomenal. Because that pocket-money meter not only measures voltage, current, and resistance, it does so accurately and repeatably, and to compare that with what might have gone before is to show just how much better a device it is.

Thirty years ago, a digital multimeter was an expensive item, and most multimeters were still analogue. A cheap multimeter was therefore invariably a small pocket analogue device, and the very cheap ones could be astoundingly awful. Accuracy and repeatability in reading weren’t their strong points, and while I am a great fan of analogue multimeters when it comes to spotting dips and trends in tweaking analogue circuitry, even I can’t find reason to praise the inexpensive ones. By comparison the DT830 delivers reliable and accurate readings with a high-impedance input, something I would have given a lot for in 1985.

That Performance Is No Fluke

So given that it costs considerably less than a pint of beer in a British pub, how does such a cheap instrument do it? The answer is, by standing on the shoulders of giants. My colleague Anool Mahidharia supplied the answer here back in 2017 when he took a look at the Intersil 71XX series of integrated circuits; the archetypal DT830 contains an ICM 7106 3.5 digit digital panel meter chip, whose roots lie in a much more exclusive stratum of the industry.

(Despite there being a load of newer and more accomplished multimeter chips on the market I was surprised to find that none of them had found their way into the meters I’d opened.)

The ICM 7106 was based on work Intersil did in 1977 to produce the part in Fluke’s first portable DMM, the model 8020A.

So you’re not getting anywhere near the physical design or component quality of that expensive meter, but you are benefiting from the tech that made its ancestor a very good instrument for the 1970s. The dual-slope integrating ADC and precision reference are the same as the ones in many far more expensive meters, which is what makes the reading from your few-dollar DT830 one you can trust. Not bad for something you might dismiss as a piece of junk!
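To see why a dual-slope integrating ADC gives trustworthy readings even when the surrounding parts are cheap, it helps to work through the arithmetic. The sketch below is a simplified model, not the chip's actual internals: the 1000-count integrate phase and 100 mV reference are assumptions chosen to resemble a typical 200 mV range, and `dual_slope_counts`, `rc` and `t_clk` are illustrative names and values.

```python
def dual_slope_counts(v_in, v_ref=0.100, integrate_counts=1000, rc=0.05, t_clk=1e-5):
    """Model one dual-slope conversion and return the displayed count."""
    # Phase 1: integrate the unknown input for a fixed number of clock ticks.
    v_peak = v_in * integrate_counts * t_clk / rc
    # Phase 2: ramp back to zero using the known reference and time how long it takes.
    t_deintegrate = v_peak * rc / v_ref
    # The display shows how many clock ticks phase 2 lasted.
    return round(t_deintegrate / t_clk)


# A 50 mV input reads as 500 counts: count = integrate_counts * v_in / v_ref.
print(dual_slope_counts(0.050))
# Changing the integrator's RC value (a cheap, drifting part) leaves the reading unchanged.
print(dual_slope_counts(0.050, rc=0.2))
# Just under full scale on a 3.5 digit display.
print(dual_slope_counts(0.1999))
```

Because the same resistor, capacitor and clock are used in both phases, they cancel out of the result. The reading depends only on the ratio of the input to the reference, which is why the quality of the reference and the converter chip matters far more than the quality of the other components.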

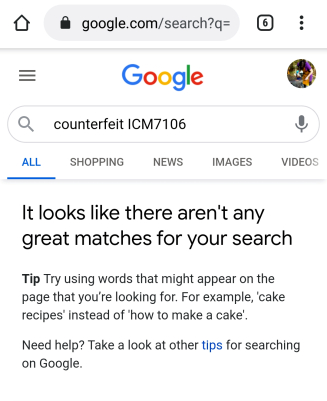

If there is something to be gleaned from this story, it is a very real demonstration of the power of semiconductor manufacturing. Assuming it has passed acceptable factory QA, every 7106 is as good as any other 7106, from the first one made by Intersil in the 1970s through to the unknown-origin chip hiding under an epoxy blob in my cheap meter. The manufacturer can skimp on every other component in the meter, but assuming that there’s no money in counterfeiting a 43-year-old chip that long ago left its premium product phase behind and has been manufactured by many sources over the years, they can’t skimp on the chip that powers it. To be an ICM7106, it must have the same features as the original from the 1970s, thus my bargain-basement meter still shares something that matters with one of far higher quality.

The DT830 multimeter, then. It may be a heap of junk, but it’s an astonishingly good heap of junk. I, for one, salute it.
